
Posted by Abhishek R. S. on 2024-07-23, updated on 2024-07-31

The blog is about creating a serverless deployment using an AWS Lambda function in Python with Kinesis streaming for serving a machine learning model. The project can be found in the following GitHub repo: ML_NY_TAXI/aws_lambda_kinesis.

One benefit of using data streams is that they support a one-to-many relationship, i.e., one publisher to many subscribers. Another important benefit is that the retention period of data on streams is much longer. This makes streams usable for applications where a web service based on HTTP POST requests cannot be used because of its lower request timeout.

1.1) IAM setup

Although this is out of the scope of this blog post, it is standard practice to set up IAM users, groups and roles. Create an AWS user if one is not present already. Create groups for AWS Lambda users, Kinesis users, API Gateway admins and ECR users, and add the user to these groups. Add appropriate policies to these groups by giving full access to the relevant services. Create a role for the Lambda function and add the policy for Lambda Kinesis execution access.

1.2) Setting up a Kinesis stream for ride and prediction events

A Kinesis stream can be created in the AWS Kinesis console. Refer to the following steps.
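For readers who prefer scripting over the console, the same setup can be sketched with boto3. This assumes two streams (one for ride events, one for predictions) with a single shard each; the stream names and shard count are hypothetical, not taken from the project. The client is passed in as a parameter so the logic can be tried without AWS.

```python
import time


def create_streams(kinesis, stream_names, shard_count=1, poll_seconds=5):
    """Create each Kinesis stream and wait until it leaves the CREATING state.

    `kinesis` is assumed to be a boto3 Kinesis client, i.e. boto3.client("kinesis").
    Stream names passed in are hypothetical placeholders.
    """
    for name in stream_names:
        kinesis.create_stream(StreamName=name, ShardCount=shard_count)

    statuses = {}
    for name in stream_names:
        # Poll the stream summary until creation has finished.
        while True:
            summary = kinesis.describe_stream_summary(StreamName=name)
            status = summary["StreamDescriptionSummary"]["StreamStatus"]
            if status != "CREATING":
                break
            time.sleep(poll_seconds)
        statuses[name] = status
    return statuses
```

With a real client this would be called along the lines of `create_streams(boto3.client("kinesis"), ["ride_events", "ride_predictions"])`, returning `ACTIVE` for each stream once it is ready.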

1.3) Creating a docker image for the lambda function

A list of publicly available Docker images for AWS can be found in the Amazon Elastic Container Registry (ECR) Public Gallery. One can search for "lambda python" and find a Docker image for the appropriate Python version under Image tags. This image can be used as the base image to build a custom Docker image for a Lambda function.
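The custom image layers the function code on top of this base image. The project's handler is not shown in this post, so the following is only a sketch of what a Kinesis-triggered handler could look like. Lambda delivers each Kinesis record base64-encoded under `record["kinesis"]["data"]`; the handler decodes it, predicts, and publishes the result to the prediction stream. The event fields (`ride_id`, `ride`), the `PREDICTIONS_STREAM_NAME` environment variable and the `predict` stand-in are all hypothetical.

```python
import base64
import json
import os

# Hypothetical environment variable holding the prediction stream name.
PREDICTIONS_STREAM = os.getenv("PREDICTIONS_STREAM_NAME", "ride_predictions")


def predict(ride):
    """Hypothetical stand-in for the real model's prediction."""
    return 10.0 + 2.0 * float(ride.get("trip_distance", 0.0))


def handle_event(event, kinesis):
    """Decode each Kinesis record, predict, and publish the prediction."""
    predictions = []
    for record in event["Records"]:
        payload = base64.b64decode(record["kinesis"]["data"])
        ride_event = json.loads(payload)
        prediction = {
            "ride_id": ride_event.get("ride_id"),
            "predicted_duration": predict(ride_event["ride"]),
        }
        kinesis.put_record(
            StreamName=PREDICTIONS_STREAM,
            Data=json.dumps(prediction).encode("utf-8"),
            PartitionKey=str(prediction["ride_id"]),
        )
        predictions.append(prediction)
    return {"predictions": predictions}


_kinesis_client = None


def lambda_handler(event, context):
    # boto3 is imported lazily and the client is cached for warm invocations.
    global _kinesis_client
    if _kinesis_client is None:
        import boto3
        _kinesis_client = boto3.client("kinesis")
    return handle_event(event, _kinesis_client)
```

Keeping the decoding logic in `handle_event`, separate from `lambda_handler`, is a design choice that lets it be exercised locally with a stand-in client.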

Unlike a typical

To build the Docker image, use the following command:
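The exact command is not preserved in this post. As a hedged sketch, a standard `docker build` invocation can be assembled and run from Python with `subprocess`. The image name and tag are hypothetical, and `--platform linux/amd64` is an assumption chosen to match the x86_64 architecture selected for the Lambda function.

```python
import shlex
import subprocess


def docker_build_command(image_name, tag="v1", context_dir=".", platform="linux/amd64"):
    """Assemble a `docker build` command for the Lambda image (names are hypothetical)."""
    return [
        "docker", "build",
        "--platform", platform,
        "-t", f"{image_name}:{tag}",
        context_dir,
    ]


def build_image(image_name, tag="v1", context_dir="."):
    """Run the build; check=True raises CalledProcessError if it fails."""
    cmd = docker_build_command(image_name, tag, context_dir)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```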

1.4) Creating a repo for docker container on AWS ECR

Create a private repo for the Docker container using the following steps: ECR -> Create repo -> Select private -> Choose a name for the repo -> Create repo.
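Pushing to the new repo requires tagging the local image with the repo URI, which follows the `<account_id>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>` pattern. The sketch below assembles the usual login, tag and push commands and shows the boto3 equivalent of creating the private repo. The account ID used in examples is AWS's documentation placeholder, and the repo and image names are hypothetical.

```python
def ecr_image_uri(account_id, region, repo_name, tag="v1"):
    """Return the URI a local image must be tagged with before pushing to ECR."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo_name}:{tag}"


def push_commands(account_id, region, repo_name, local_image, tag="v1"):
    """Shell commands to log in to ECR, tag the local image and push it."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    remote = ecr_image_uri(account_id, region, repo_name, tag)
    return [
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"docker tag {local_image} {remote}",
        f"docker push {remote}",
    ]


def create_private_repo(ecr, repo_name):
    """Create a private ECR repo; `ecr` is assumed to be boto3.client("ecr")."""
    response = ecr.create_repository(repositoryName=repo_name)
    return response["repository"]["repositoryUri"]
```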

Once the repo is created, go to the repo on AWS, see

1.5) Setting up the Lambda function on AWS with additional configurations

The AWS Lambda function needs to be set up. This can be done using the following steps: Lambda -> Create function -> Select from container image -> Choose a name for the lambda function -> Choose the container image with browse images -> Choose x86_64 -> Choose existing role and select the role that was created earlier -> Create.

Add environment variables to the Lambda function in Configuration -> Environment variables -> Edit. Add a trigger for the Lambda function by selecting the appropriate data stream that was created earlier. Set a higher timeout, such as 30 sec. (for the initial loading), and slightly more memory in Configuration -> General -> Timeout.

After applying the above changes, test the function. The first run takes longer (> 20 sec.), while subsequent runs are much faster (milliseconds). The following image shows the successful test of the Lambda function.

1.6) Attaching additional policies for the Lambda function role

Add an additional policy to the role that was created earlier to allow the Lambda function to access the S3 bucket and load the saved model.

This can be done using the following steps.
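One plausible way for the function to use this permission is to download the model once and keep it in a module-level variable, since Lambda reuses warm containers between invocations; this may also account for the slow first run described earlier. The sketch below assumes a pickled model, and the bucket, key and environment variable names are hypothetical.

```python
import os
import pickle
import tempfile

# Hypothetical environment variables; the post does not name them.
MODEL_BUCKET = os.getenv("MODEL_BUCKET", "my-model-bucket")
MODEL_KEY = os.getenv("MODEL_KEY", "models/model.pkl")

# Module-level cache: it survives between invocations of a warm container,
# so only the first (cold) invocation pays the download cost.
_model = None


def load_model(s3, bucket=MODEL_BUCKET, key=MODEL_KEY):
    """Download the pickled model once and reuse it afterwards.

    `s3` is assumed to be boto3.client("s3"); the role needs s3:GetObject
    on the object. On Lambda, the temp directory resolves to /tmp.
    """
    global _model
    if _model is None:
        local_path = os.path.join(tempfile.gettempdir(), os.path.basename(key))
        s3.download_file(bucket, key, local_path)
        with open(local_path, "rb") as f:
            _model = pickle.load(f)
    return _model
```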

Add another policy to the role that was created earlier to allow the Lambda function to publish predictions to the prediction data stream.

This can be done using the following steps.

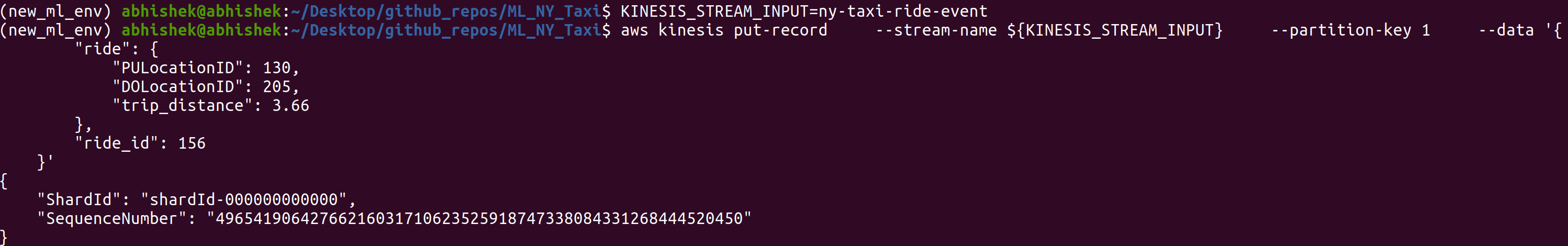
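The two permissions from this section can also be expressed as a single inline policy document and attached with boto3's `put_role_policy`. This is a sketch rather than the post's console setup: the region, account ID, stream name, bucket, and role and policy names are hypothetical, and an inline policy is an alternative to attaching separate policies.

```python
import json


def kinesis_stream_arn(region, account_id, stream_name):
    return f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"


def lambda_role_policy(stream_arn, model_bucket):
    """Allow writes to the prediction stream and reads from the model bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
                "Resource": stream_arn,
            },
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{model_bucket}/*",
            },
        ],
    }


def attach_inline_policy(iam, role_name, policy_name, document):
    """Attach the document as an inline role policy; `iam` is boto3.client("iam")."""
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(document),
    )
```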

1.7) Verifying the working of the Lambda function in the terminal

In a terminal, run the following commands to send data to the input Kinesis stream.

The following image shows that the data was successfully published to the Kinesis input stream.

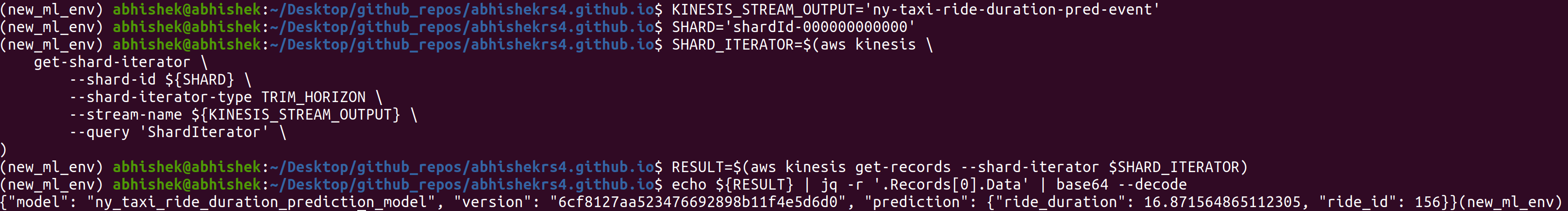
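The same publishing step can be sketched in Python with boto3's `put_record`. The ride fields and stream name are hypothetical, and the ride ID is reused as the partition key; boto3 handles the base64 encoding of `Data` on the wire, so raw bytes are passed.

```python
import json


def make_ride_event(ride_id, pickup_location, dropoff_location, trip_distance):
    """Build a hypothetical ride event; field names are illustrative only."""
    return {
        "ride_id": ride_id,
        "ride": {
            "PULocationID": pickup_location,
            "DOLocationID": dropoff_location,
            "trip_distance": trip_distance,
        },
    }


def publish_ride(kinesis, stream_name, ride_event):
    """Send one JSON-encoded ride event; `kinesis` is boto3.client("kinesis")."""
    response = kinesis.put_record(
        StreamName=stream_name,
        Data=json.dumps(ride_event).encode("utf-8"),
        PartitionKey=str(ride_event["ride_id"]),
    )
    return response["ShardId"], response["SequenceNumber"]
```

With a real client, a call would look like `publish_ride(boto3.client("kinesis"), "ride_events", make_ride_event(156, 130, 205, 3.66))`.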

In a terminal, run the following commands to read data from the output Kinesis stream.

The following image shows the data being successfully read from the Kinesis output stream and decoded.
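Reading can be sketched in Python too. boto3 returns each record's `Data` already base64-decoded, so, unlike raw CLI output, only the JSON needs parsing. The stream name and the single-shard assumption (`shardId-000000000000`) are hypothetical.

```python
import json


def read_predictions(kinesis, stream_name, shard_id="shardId-000000000000", limit=10):
    """Read up to `limit` records from one shard, starting at the oldest record.

    `kinesis` is assumed to be boto3.client("kinesis").
    """
    iterator = kinesis.get_shard_iterator(
        StreamName=stream_name,
        ShardId=shard_id,
        ShardIteratorType="TRIM_HORIZON",
    )["ShardIterator"]
    response = kinesis.get_records(ShardIterator=iterator, Limit=limit)
    # Data arrives as bytes; json.loads accepts bytes directly.
    return [json.loads(record["Data"]) for record in response["Records"]]
```

A single `get_records` call can come back empty even when data exists further along the shard, so a more robust reader would keep calling it with the returned `NextShardIterator`.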

1.8) Monitoring CloudWatch Logs

One can easily look at the CloudWatch logs from the Lambda function console: Lambda function console -> Monitor -> View CloudWatch logs -> Select the log stream to view the log events.

1.9) Challenges faced

Main takeaway

Next Steps
