
Posted by Abhishek R. S. on 2024-05-09, updated on 2024-05-21

The blog is about... Workflow orchestration using Prefect.

1.1) Prefect Setup

The installation of Prefect is pretty straightforward.

Run the following command to install prefect.

1.2) Starting the prefect server

The following command can be used to start the prefect server.

To configure prefect server, run the following command.

The following error may appear.

1.3) Main components of Prefect

Prefect can be used for orchestrating a wide range of scalable data pipeline applications. The main advantages of using Prefect as a workflow orchestrator are scheduling, caching, retries, notifications, logging and much more. The main components of a Prefect workflow are the task and the flow. A flow is like a container for workflow logic, whereas a task is a discrete unit of work in the workflow. A flow connects tasks that have dependencies on one another. There can also be subflows, i.e. a flow within another flow. For tasks that are very expensive, caching can be leveraged when the inputs do not change. This will definitely come in handy 💪. Also, retries can be leveraged for both flows and tasks.

1.4) Using Prefect for workflow orchestration

I developed a simple data ingestion workflow using Prefect to understand how it can be leveraged. This is available in my GitHub repo Data_Ingestion_Prefect.

The data ingestion pipeline developed in this project uses the following workflow.

It downloads the The flow is divided into four different tasks.

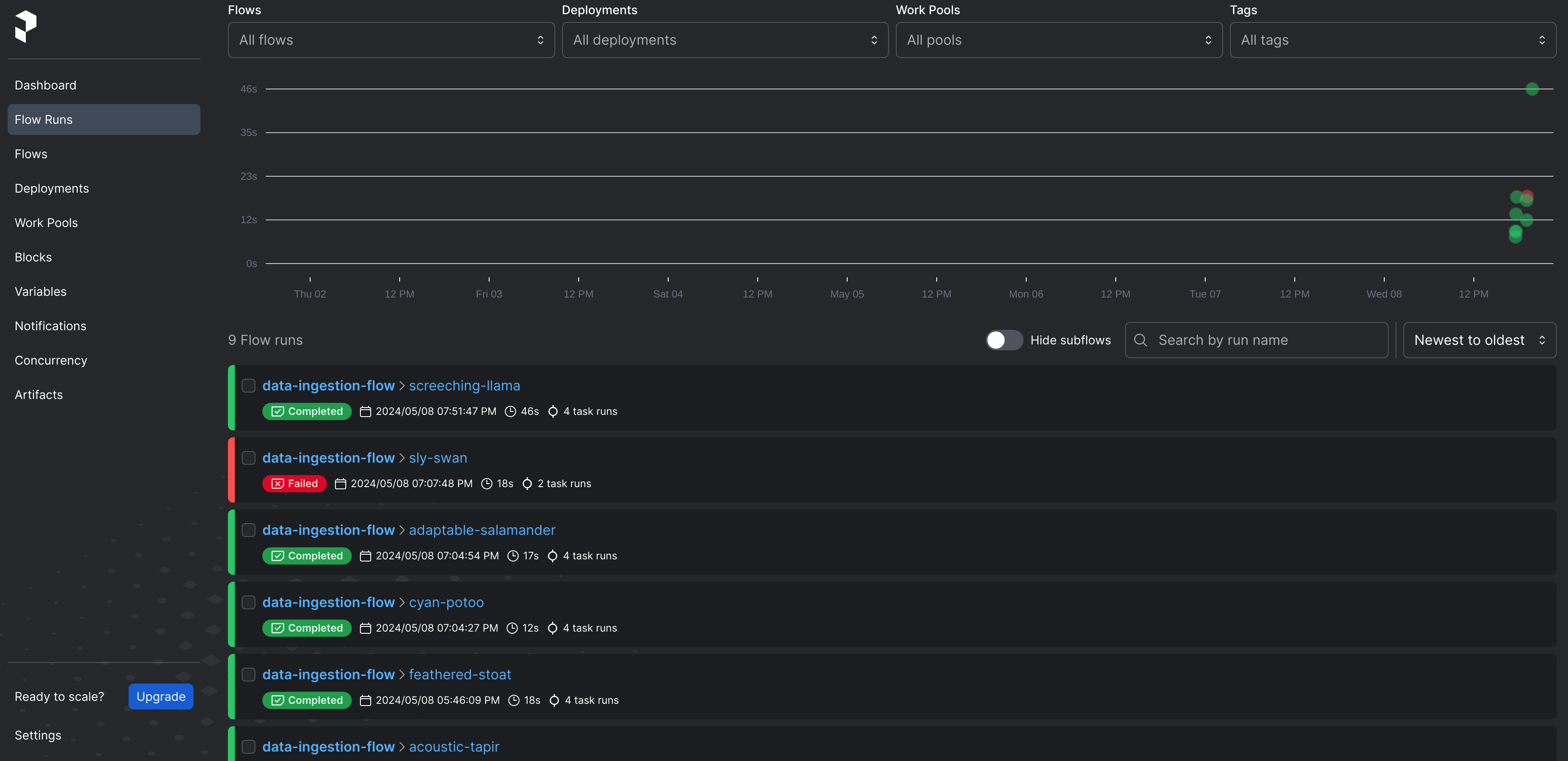

To understand how things worked, I tried two scenarios: one where the workflow succeeded and one where it failed. Testing the first scenario, where the workflow succeeds, was straightforward. To test the other scenario, I tried to download a non-existent dataset file from the dataset webpage. I have included some dashboard visualizations from my experiments. The following image shows a Prefect dashboard visualization of the registered flows.

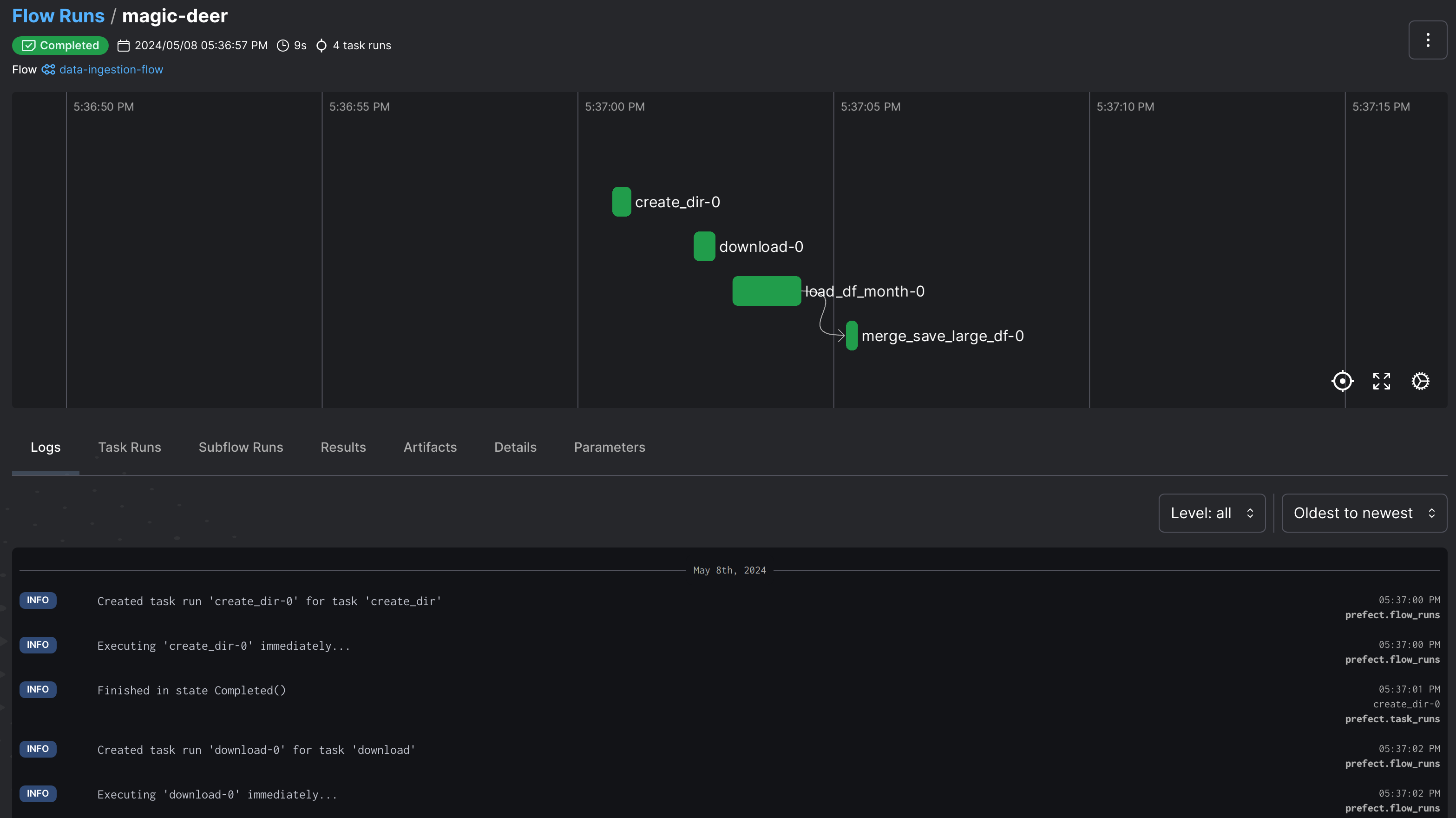

The following image shows a Prefect dashboard visualization of a successful flow.

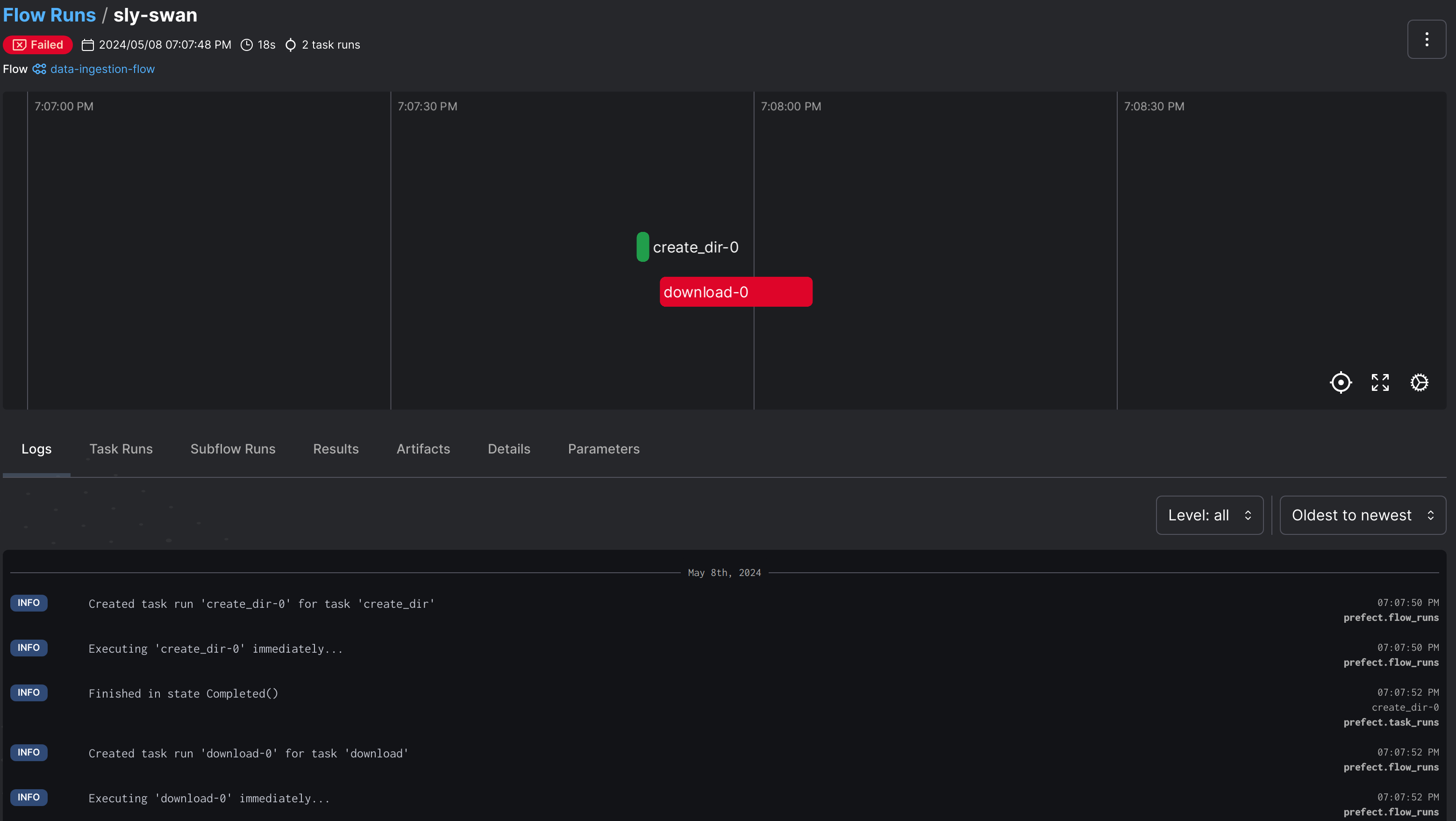

The following image shows a Prefect dashboard visualization of a failed flow.

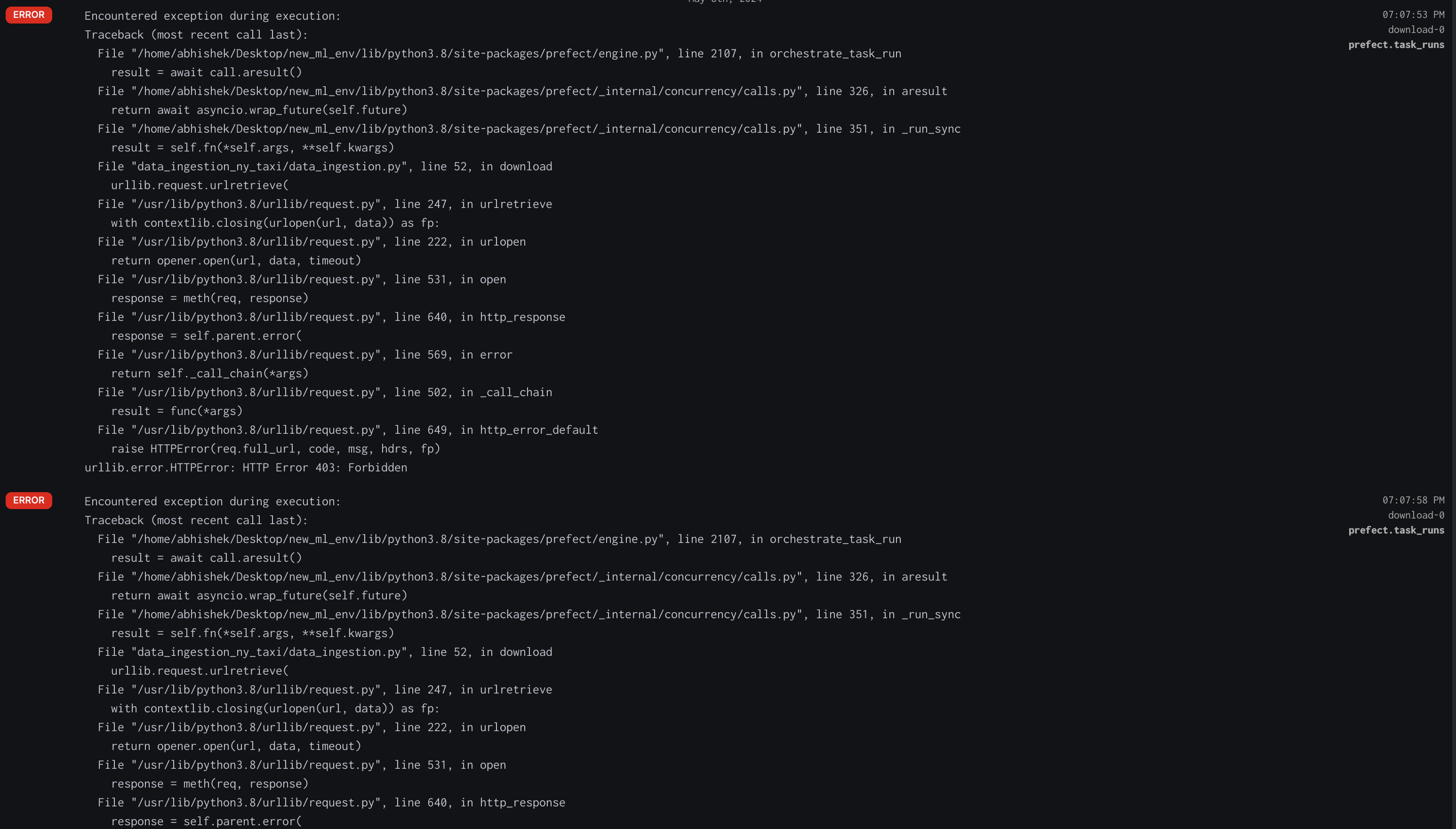

The following image shows the Prefect dashboard logs of the failed retries for a failed flow.

1.5) Creating a local deployment

Main takeaway

I learned to perform workflow orchestration using Prefect with the data ingestion pipeline example. The main advantage is the simplicity of using Prefect with just Python decorators. The sophistication that Prefect has to offer, like caching, retries, scheduling, support for cloud etc., can only be appreciated by using it more and more. This blog can be used as a reference for deploying complex MLOps and data pipelines using Prefect for orchestration. On to deploying more complex MLOps and data pipelines with Prefect orchestration now 😄.

Next Steps
