
Posted by Abhishek R. S. on 2024-05-03, updated on 2024-05-07

This blog is a setup and usage guide for an AWS instance for deploying a Machine Learning pipeline.

1) EC2 instance Setup

I used MLFlow for tracking, both locally and on AWS. The MLFlow server can be run on an AWS EC2 instance. In this section, I discuss how to set up an EC2 instance for this purpose.

1.1) Basic Instructions

The first step is to set up an EC2 instance. This can be done via services -> compute -> EC2 -> launch an instance. Configure the instance with the settings you need.

1.2) Remote login to the EC2 instance

Go to instances and select the instance that you just created. Use its public IPv4 address to log in.

The following command can be used for remote login.

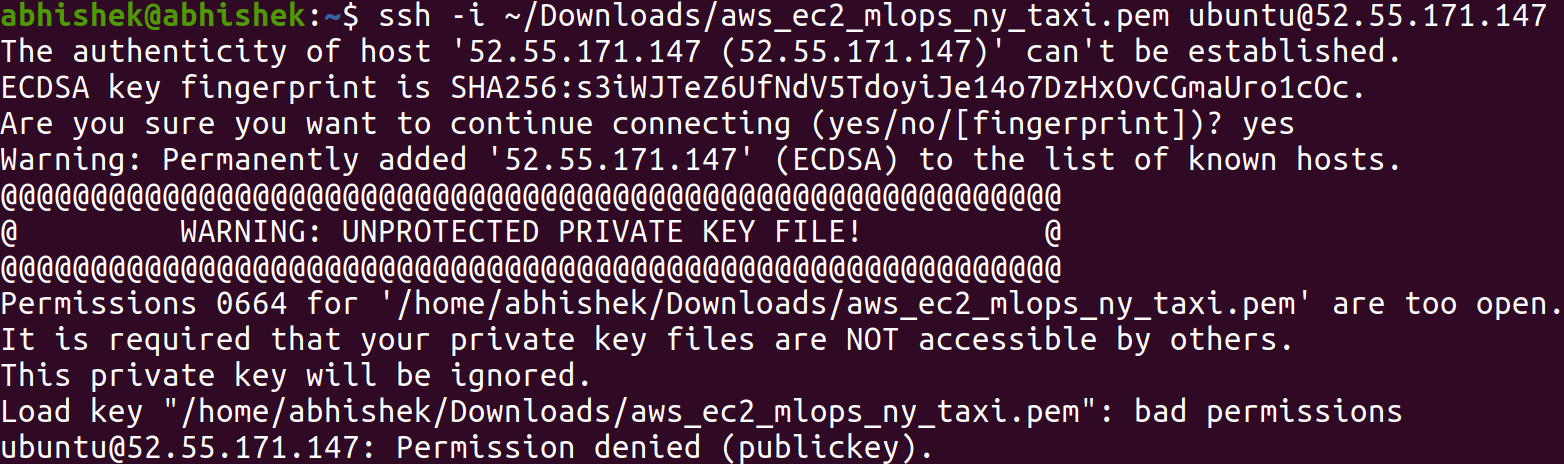
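The login command itself is in the image above. For readers who prefer to script it, here is a minimal Python sketch that assembles the usual `ssh -i <key> <user>@<public-ipv4>` invocation. The login user `ec2-user` is an assumption that holds for Amazon Linux AMIs (Ubuntu AMIs use `ubuntu`), and the key file name and IP address are hypothetical placeholders.

```python
import shlex
import subprocess


def build_ssh_command(key_path: str, public_ipv4: str, user: str = "ec2-user") -> list[str]:
    """Return the argv for logging in to an EC2 instance over SSH.

    Assumption: "ec2-user" is the default login user on Amazon Linux AMIs;
    other AMIs use a different user (for example "ubuntu" on Ubuntu AMIs).
    """
    # -i points ssh at the private key file downloaded when the key pair was created.
    return ["ssh", "-i", key_path, f"{user}@{public_ipv4}"]


def connect(key_path: str, public_ipv4: str, user: str = "ec2-user") -> None:
    """Open an interactive SSH session (blocks until you log out)."""
    subprocess.run(build_ssh_command(key_path, public_ipv4, user), check=True)


if __name__ == "__main__":
    # Hypothetical key file name and a documentation-range IP address (203.0.113.0/24).
    print(shlex.join(build_ssh_command("my-key.pem", "203.0.113.10")))
```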

I faced one error while trying to log in remotely. The following image shows the error.

This can be resolved with a simple permission change for the private key file.

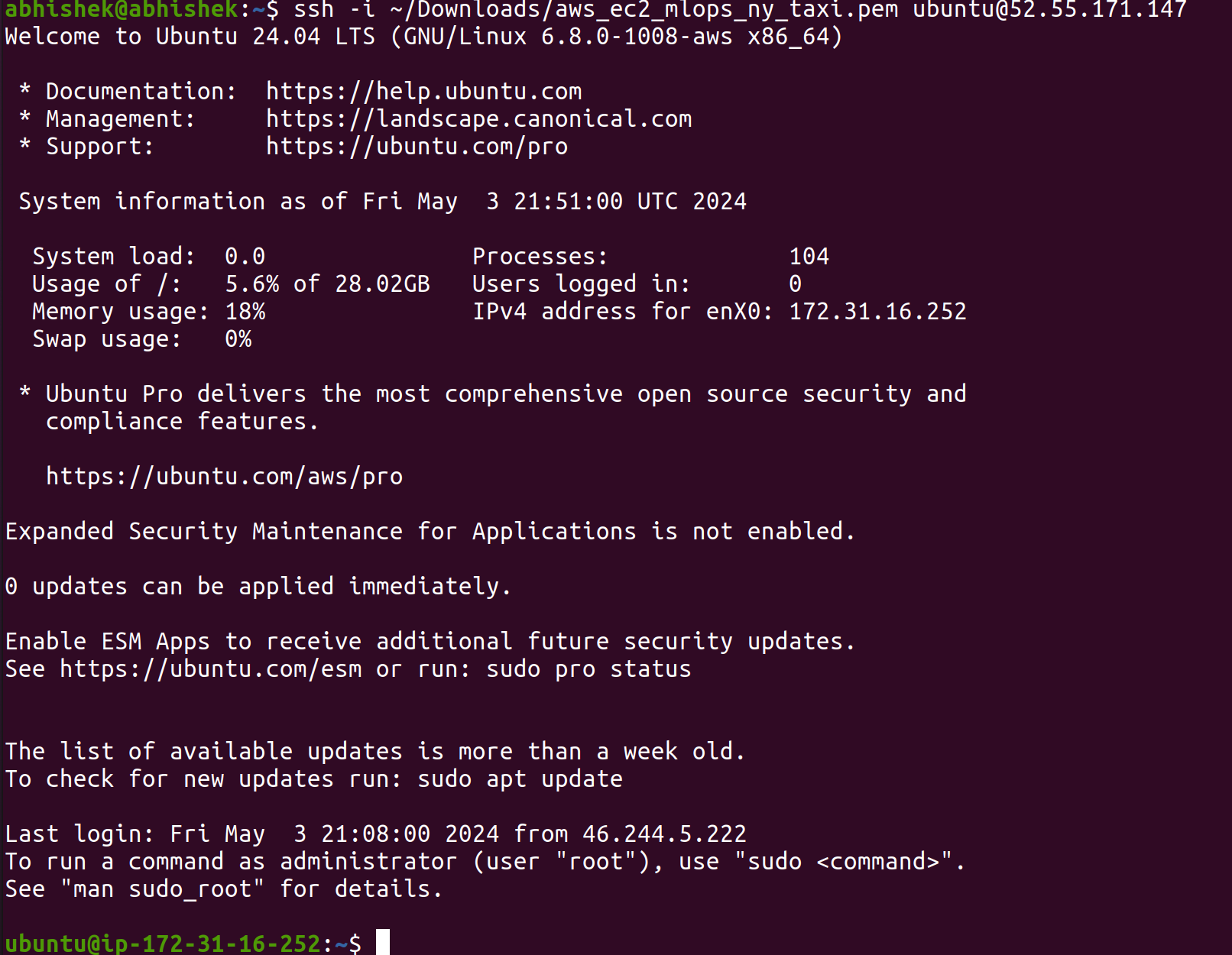
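Assuming the error is the common OpenSSH complaint that the private key's permissions are too open ("UNPROTECTED PRIVATE KEY FILE"), the usual fix is `chmod 400` on the key file. A small Python equivalent is sketched below; the file name `my-key.pem` is a hypothetical placeholder, and the sketch targets Linux/macOS.

```python
import os
import stat


def restrict_key_permissions(key_path: str) -> int:
    """Make the private key readable only by its owner, like `chmod 400 <key>`.

    Returns the resulting permission bits so the caller can check them.
    Intended for Linux/macOS; on Windows os.chmod only toggles the read-only flag.
    """
    os.chmod(key_path, stat.S_IRUSR)  # stat.S_IRUSR == 0o400 (owner read-only)
    return stat.S_IMODE(os.stat(key_path).st_mode)


if __name__ == "__main__":
    key = "my-key.pem"  # hypothetical file name; use the key pair you downloaded
    if os.path.exists(key):
        print(oct(restrict_key_permissions(key)))
    else:
        print(f"{key} not found")
```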

Once you reach this stage, you can do whatever you want. The next step would obviously be to set up a Python virtual environment and install the relevant packages. An important package to install is the

1.3) Setting up AWS key and secret access key

This can be done via account -> security credentials -> create access key -> copy the access key and secret access key.

1.4) Install awscli and boto3

These also have to be installed on the local machine. This is the case even when the training happens locally and only the tracking and storage happen on the AWS cloud.

Run

1.5) Run MLFlow server

Run the following command to start the MLFlow server.
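A minimal sketch of how an `mlflow server` invocation for this kind of setup could be assembled. The flags `--host`, `--port`, `--backend-store-uri` and `--default-artifact-root` are real `mlflow server` options; the host `0.0.0.0` (listen on all interfaces so the public IPv4 address works), port 5000 (MLFlow's default) and the example URIs are assumptions, not necessarily the values used in this setup.

```python
import shlex


def build_mlflow_server_command(backend_store_uri: str, artifact_root: str,
                                host: str = "0.0.0.0", port: int = 5000) -> list[str]:
    """Return the argv for `mlflow server` with a database backend and an artifact root.

    host="0.0.0.0" listens on all interfaces so the server is reachable via the
    instance's public IPv4 address (the security group must still allow the port).
    """
    return [
        "mlflow", "server",
        "--host", host,
        "--port", str(port),
        "--backend-store-uri", backend_store_uri,  # metadata: params, metrics, ...
        "--default-artifact-root", artifact_root,  # files: models, requirements, ...
    ]


if __name__ == "__main__":
    # Hypothetical values: a local SQLite file and an S3 bucket named "my-mlflow-artifacts".
    cmd = build_mlflow_server_command("sqlite:///mlflow.db", "s3://my-mlflow-artifacts/")
    print(shlex.join(cmd))
```

With an `s3://` artifact root, clients typically upload artifacts to the bucket directly through boto3, which is one reason the local machine also needs AWS credentials.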

If you get the error below,

then make changes to the following file:

If you get the following error,

Add an inbound rule to the security group to allow access to port

2) Configuring an S3 bucket

The model files, requirements and other environment files are stored as artifacts in MLFlow. When using MLFlow, I have tried storing these both locally and in an Amazon S3 bucket. An S3 bucket can be created via services -> storage -> S3 -> create bucket. Choose a globally unique bucket name and keep the default settings.

3) Configuring and setting up an RDS instance

If we choose to, MLFlow stores all the metadata of an ML experiment, such as params and metrics, in a relational database. When running MLFlow locally, I have used an SQLite database; on AWS, I have used PostgreSQL. The AWS RDS setup can be done via services -> database -> RDS -> create database.
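The storage choices above map onto the URIs handed to the MLFlow server: a backend store URI for the metadata and an artifact root for the files. The sketch below builds both, for the local SQLite case and for PostgreSQL on RDS. The helper names, bucket, endpoint and credentials are hypothetical; 5432 is PostgreSQL's default port.

```python
from urllib.parse import quote


def sqlite_backend_uri(db_file: str = "mlflow.db") -> str:
    """Backend store URI for a local SQLite file, relative to the working directory."""
    return f"sqlite:///{db_file}"


def postgres_backend_uri(user: str, password: str, endpoint: str,
                         database: str, port: int = 5432) -> str:
    """Backend store URI for a PostgreSQL database on Amazon RDS.

    `endpoint` is the RDS instance endpoint hostname shown in the console.
    User and password are percent-encoded so characters such as '@' or '/'
    cannot break the URI.
    """
    return (f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{endpoint}:{port}/{database}")


def s3_artifact_root(bucket: str, prefix: str = "mlflow") -> str:
    """S3 location under which run artifacts (model files, requirements, ...) are stored."""
    return f"s3://{bucket}/{prefix.strip('/')}"


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    print(sqlite_backend_uri())
    print(postgres_backend_uri("mlflow", "change-me", "mydb.example.rds.amazonaws.com", "mlflowdb"))
    print(s3_artifact_root("my-mlflow-artifacts"))
```

A `postgresql://` URI makes SQLAlchemy use the psycopg2 driver by default, so that package has to be installed wherever the MLFlow server runs, and the RDS security group has to allow inbound traffic on the database port from the EC2 instance.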

Main takeaway

This is not my first time setting up / using an EC2 instance and an S3 bucket 😅, but it is my first time configuring MLFlow on AWS by myself and configuring RDS. Although I faced some challenges while setting up this infrastructure on AWS, it was a positive learning experience, and I learned that it is relatively easy to set up and use an AWS EC2 instance + S3 bucket + RDS (PostgreSQL) for MLFlow! This blog can serve as a guide for deploying complex ML pipelines on AWS. On to deploying complex MLOps pipelines now 😄.

Next Steps
