
Posted by Abhishek R. S. on 2024-04-15, updated on 2024-04-27

In this project, a FastAPI backend application and a Streamlit frontend application are deployed to Hugging Face Spaces.

The deployed API backend application can be found in the Hugging Face Space Overhead_MNIST.

The source code is also available in the GitHub repository overhead_mnist.

The Overhead MNIST dataset, with ten classes, is used for this project.

A CNN is trained to classify overhead satellite images.
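A classifier like this outputs one raw score (logit) per class, and the API has to turn those ten scores into a label. The sketch below shows one common way to do that (softmax, then argmax) in plain Python. The class names and their index order are an assumption for illustration, not necessarily the order the trained CNN uses.

```python
import math

# Assumed index-to-label mapping for the ten Overhead MNIST classes;
# the real order depends on how the training labels were encoded.
CLASS_NAMES = [
    "car", "harbor", "helicopter", "oil_gas_field", "parking_lot",
    "plane", "runway_mark", "ship", "stadium", "storage_tank",
]


def softmax(logits):
    """Convert raw CNN outputs (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_prediction(logits, class_names=CLASS_NAMES):
    """Return (label, probability) for the highest-scoring class."""
    if len(logits) != len(class_names):
        raise ValueError("expected exactly one logit per class")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)  # argmax
    return class_names[best], probs[best]
```

For example, `decode_prediction([0.1] * 7 + [3.0] + [0.1] * 2)` picks index 7, which maps to `"ship"` under the assumed ordering.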

An API is deployed using FastAPI, to which an image can be sent in a

I had to use README.md because, in any Hugging Face Spaces repository, this file contains the Space's metadata.

The app_file field is important: it specifies the main application file.

Port 7860 is the default port for serving Docker applications deployed on Hugging Face Spaces.
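The metadata lives in a block fenced by `---` lines at the top of README.md. The sketch below reads flat `key: value` pairs from that block and resolves the serving port, falling back to 7860 when `app_port` is absent. The README contents are a hypothetical example, not the actual file from the repo, and the parser is deliberately minimal (no nested YAML, lists, or quoting). An `app_file` entry would be read the same way, via `meta.get("app_file")`.

```python
# Hypothetical README.md for a Docker-based Space; values are illustrative.
EXAMPLE_README = """---
title: Overhead MNIST
sdk: docker
app_port: 7860
---
# Overhead MNIST
"""

DEFAULT_APP_PORT = 7860  # port Spaces uses when app_port is not set


def read_front_matter(text):
    """Parse flat `key: value` pairs between the leading `---` fences."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no metadata block at the top of the file
    meta = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return meta  # closing fence reached
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    raise ValueError("front matter block is not closed with '---'")


def app_port(meta):
    """Return the port the app should listen on, defaulting to 7860."""
    return int(meta.get("app_port", DEFAULT_APP_PORT))
```

Whatever port this resolves to must match the port the server inside the container actually binds to; otherwise the Space starts but never becomes reachable.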

Dockerizing a FastAPI application is straightforward, without any major issues. However, I faced some minor issues with the dependencies.
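Dependency problems in a container often come from the installed versions drifting from what was tested locally. One way to catch that is a small check run inside the image that compares pinned `name==version` lines against what is actually installed, using the standard-library `importlib.metadata`. This is a sketch under assumptions: the requirement pins below are hypothetical, and only plain `name==version` lines (no extras or environment markers) are handled.

```python
from importlib import metadata

# Hypothetical pinned requirements; the post does not list actual versions.
EXAMPLE_REQUIREMENTS = """
# web API
fastapi==0.110.0
uvicorn==0.29.0
"""


def parse_pins(text):
    """Return {package: version} for lines of the form `name==version`."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            raise ValueError(f"unpinned requirement: {line!r}")
        pins[name.strip()] = version.strip()
    return pins


def find_mismatches(pins, lookup=metadata.version):
    """List (package, pinned, installed) for every pin that does not match.

    `installed` is None when the package is missing from the environment.
    """
    problems = []
    for name, pinned in pins.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            problems.append((name, pinned, installed))
    return problems
```

The `lookup` parameter defaults to the real installed-version query but can be swapped for a stub, which keeps the check testable outside the container.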

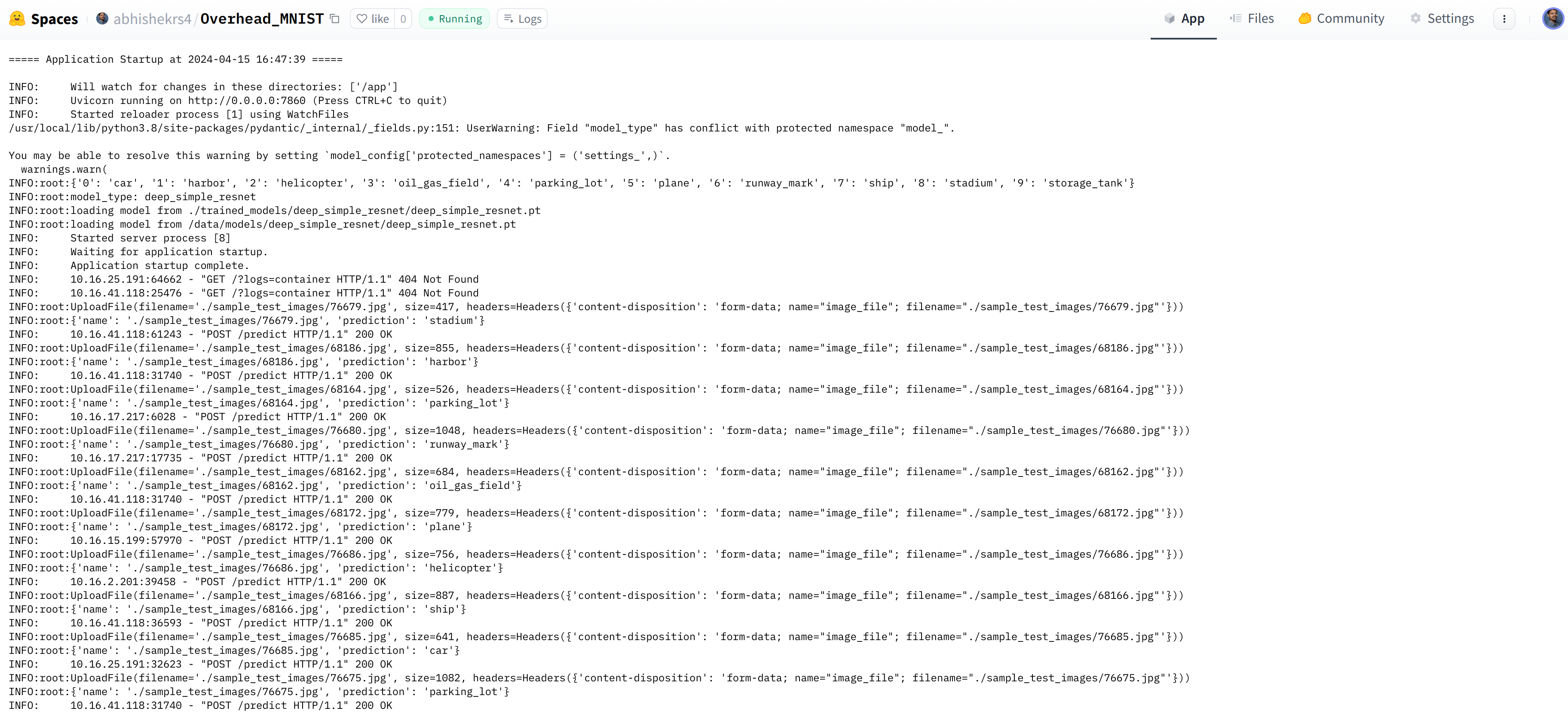

The endpoint link to which the …

The following image shows a screenshot of the output logs of the backend API.

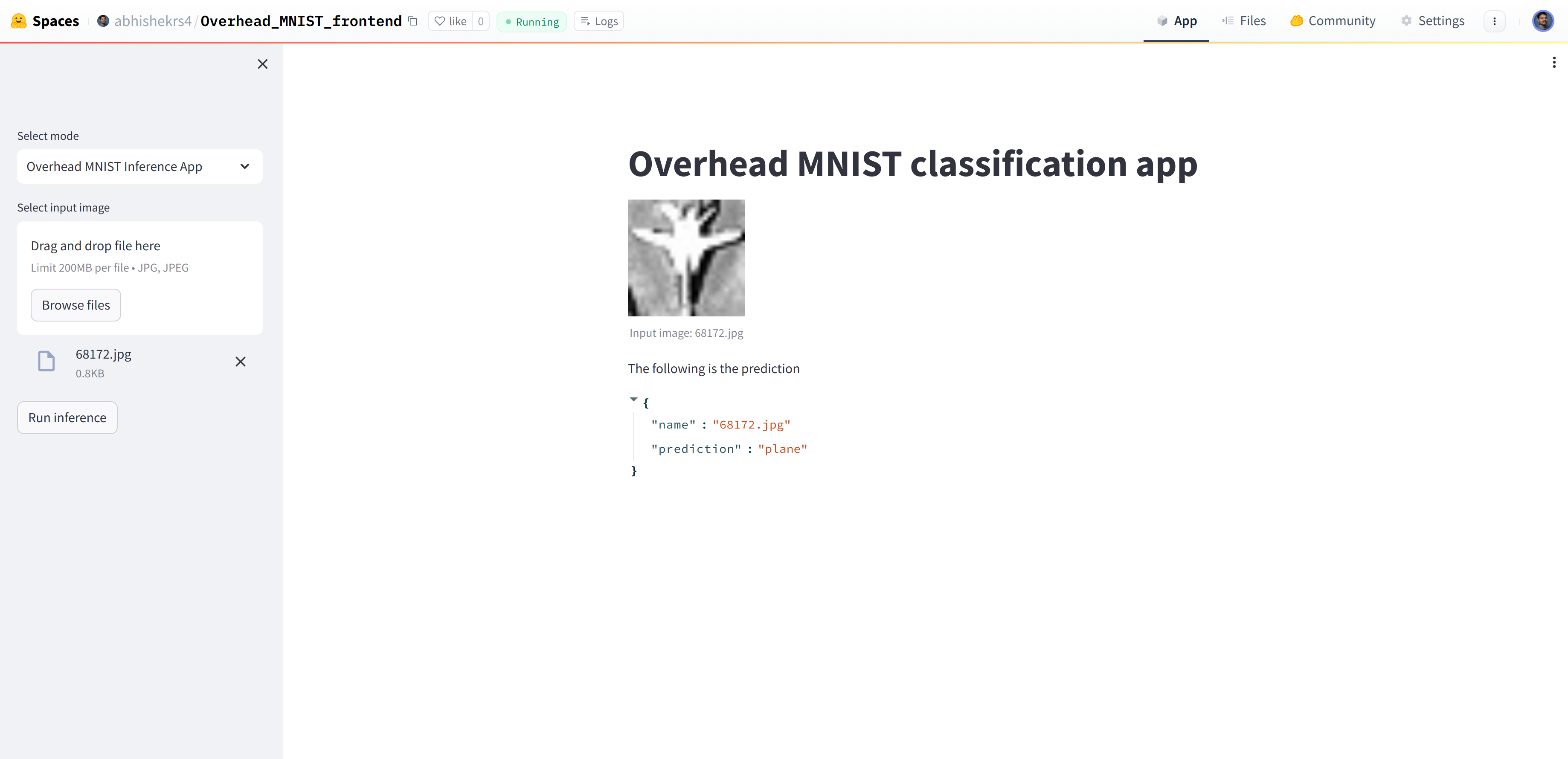
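To call the deployed backend from a script, an image file is typically uploaded as `multipart/form-data`. The standard-library sketch below builds such a request without sending it. The endpoint URL and the form field name `image_file` are hypothetical placeholders; the field name must match the upload parameter name declared in the FastAPI route.

```python
import urllib.request
import uuid

# Hypothetical endpoint; the post does not give the exact URL or route.
ENDPOINT = "https://example-user-overhead-mnist.hf.space/predict"


def build_multipart(field, filename, data, content_type="image/png"):
    """Encode one file as a multipart/form-data body and its Content-Type."""
    boundary = uuid.uuid4().hex  # random boundary unlikely to occur in data
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def make_request(image_bytes, url=ENDPOINT, field="image_file"):
    """Prepare (but do not send) a POST request carrying the image."""
    body, ctype = build_multipart(field, "sample.png", image_bytes)
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": ctype}, method="POST"
    )
```

Sending it is then a matter of `urllib.request.urlopen(make_request(image_bytes))` against the real endpoint and reading the JSON response body.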

The deployed Streamlit frontend application can be found in the Hugging Face Space Overhead_MNIST_Frontend. I faced only minor issues when deploying the Streamlit frontend.

The following image shows a screenshot of the output logs of the frontend.

To summarize, I had a positive learning experience during this project deployment.